Is Science Trustworthy? The Limits of Instrumentation

How bad instrumentation and interpretations thereof lead science astray

The central importance of operationalization to the practice of science means that the scope of science expands as new techniques and devices are invented, allowing previously infeasible observations to be made. One classic example is how the invention of the optical telescope in 1608 revolutionized astronomy, enabling Galileo to observe the moons of Jupiter for the first time in 1610. As telescopes became more powerful, and new types such as radio and X-ray telescopes were invented, the variety of data available to catalogue astronomical phenomena increased dramatically, and so did the sophistication of astronomical theories. The flip side is that if it is not actually possible to carry out an operationalization for a proposed concept, then that concept cannot be investigated scientifically. An operationalization might be impossible to carry out because it would take too much time (possibly an infinite amount), because it is too expensive to perform, or because it requires an unattainable level of precision.

It is important to note that while measurement of certain concepts may not be possible, either in principle or with current technology, this does not necessarily mean that those concepts are irrelevant. It may seem an unjustified leap to conclude that a concept is inconsequential because it cannot be measured, but this leap is not without historical precedent in positivistic philosophy of science. The behaviourist school, as discussed in the previous part, sought to remove consciousness and mental states from the study of psychology because they could not be operationalized at the time. Methodologically this is sound, but the accompanying philosophical position is unjustified. Philosopher Carl Hempel stated it as the claim that "all psychological statements that are meaningful … are translatable into statements that do not involve psychological concepts but only the concepts of physics [meaning physical behaviour]."[1] Hempel himself later disavowed this position, writing: "In order to characterize … behavioral patterns, propensities, or capacities … we need not only a suitable behavioristic vocabulary, but psychological terms as well."[2]

A more recent and still relevant example is the use of the concept of culture in the social sciences. Culture, as a scientific explanation, has been attacked in some quarters as an ideological prop to distract from unwanted genetic explanations of group and individual behaviour,[3] and elsewhere as a vaguely defined, unfalsifiable black-box contrivance that fills explanatory gaps in the social sciences.[4] The positivistic reaction might be to forbid cultural explanations entirely, and to study group behaviours as the expressions of genes and gene pools. However, denying that culture has a scientifically meaningful non-genetic component is as spurious as denying that consciousness has a meaningful non-behavioural component. The remaining problem is to operationalize measurements of culture so that its components, which are currently confused and conflated, can be disambiguated. As evolutionary psychologist John Tooby puts it, "[culture and learning] need to be replaced with maps of the diverse cognitive and motivational 'organelles' (neural programs) that actually do the work now attributed to [them]."[4] The study of consciousness, though still nascent, has embarked on a similar process, distinguishing different forms of consciousness, such as cognitive access and perceptual consciousness, each with its own methodology.[5]

One consequence of the advancement of science through the use of more powerful and complex instruments is that the scientists themselves are no longer able to build or fully understand the instruments they are using. Whereas historically scientists would often craft their own instruments – Galileo for instance personally built the telescope he used to observe the Jovian moons – instruments today are too complex to be built by the scientist, and often require extensive knowledge outside of the domain in which the instrument is used. Biologists, for instance, rarely fully understand the physics of electron microscopy, and they certainly could not themselves build an electron microscope. What they have instead is training on how to apply the technology and interpret the observations that emerge. The lack of a complete first-principles understanding of the techniques and devices applied in science increases the potential for error, since, as already discussed, properly understanding a scientific concept depends on understanding how exactly it is derived or measured.

This treatment of experimental instruments as black boxes becomes even more apparent when the instrument is specialized software, which may consist of anywhere from hundreds to millions of lines of code. Scientific software is now used extensively in all areas of science, and some fields, like bioinformatics, owe their very existence to the advent of the computer. While software necessarily meets the requirements of operationalism (every computer program is essentially a sequence of unambiguous operations executed by the processor), the disconnect between the researcher and the tools he uses can be a source of error in science. One example is code from a 2014 chemistry study that produced different results depending on the operating system used.[6] The study had been cited over 150 times by the time the bug was discovered in 2019, but a full analysis of the bug's impact is not available and is unlikely ever to be produced. There are many similar cases across different fields, such as Excel corrupting genomic data by converting gene names to dates.[7][8] Nor is the problem limited to bugs or to software applied outside its intended use. A 2023 study found that two widely used genomics software libraries produced discordant results because they implemented the same statistical calculations via different methods and with different assumptions.[9][10] This cannot be considered a bug, since the different implementations were deliberately chosen by the library authors, but it is still a source of error, because scientists are largely unaware of how various algorithms are implemented in the software libraries they employ.1 This is a problem even when the code is open source, as it was in this example; however, the trustworthiness of computer analyses is even more suspect when the code is proprietary.
For instance, the biotechnology company Illumina currently controls most of the market for next-generation DNA sequencing technology,[11] and its sequencing technology and software are proprietary. This means that scientists using this technology must necessarily treat it as a black box and assume it produces the right answers, because they cannot audit the code for themselves. This introduces the possibility of perverse incentives: measurements could be skewed to support political agendas, and bugs could be swept under the rug or fixed without transparency to protect the company's business interests.
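To illustrate how two deliberate, individually defensible implementation choices can disagree, as with the discordant genomics libraries discussed above, here is a minimal Python sketch. It is not the code of Seurat, Scanpy, or any other real library; the function names are hypothetical, and the two functions simply contrast averaging raw values before taking logs with averaging log-transformed values, each with a different pseudocount. The expression values are invented for illustration.

```python
import math

# Two hypothetical ways to compute a log2 fold change between two groups of cells.
# Neither is claimed to be the exact code of any real library.

def lfc_log_of_means(group_a, group_b, pseudocount=1.0):
    """Average the raw expression values first, then take the log2 ratio."""
    mean_a = sum(group_a) / len(group_a)
    mean_b = sum(group_b) / len(group_b)
    return math.log2((mean_a + pseudocount) / (mean_b + pseudocount))

def lfc_mean_of_logs(group_a, group_b, pseudocount=1e-9):
    """Average log1p-transformed values first, back-transform, then take the log2 ratio."""
    mean_log_a = sum(math.log1p(x) for x in group_a) / len(group_a)
    mean_log_b = sum(math.log1p(x) for x in group_b) / len(group_b)
    return math.log2((math.expm1(mean_log_a) + pseudocount) /
                     (math.expm1(mean_log_b) + pseudocount))

# Invented data: a gene expressed strongly in one cell of group A,
# and moderately in every cell of group B.
group_a = [0, 0, 0, 40]
group_b = [5, 5, 5, 5]

print(f"log of means: {lfc_log_of_means(group_a, group_b):+.2f}")
print(f"mean of logs: {lfc_mean_of_logs(group_a, group_b):+.2f}")
```

On this data the first function reports the gene as higher in group A and the second reports it as lower. Averaging logs behaves like a geometric mean and downweights the single high cell, so neither result is a bug, yet a researcher who does not know which convention a tool uses cannot tell which question the number answers.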

Even when instrumentation is working properly, the techniques used and the order in which they were developed can substantially influence the direction in which scientific ideas evolve; the growth of scientific knowledge is path dependent. Instruments constitute our way of 'seeing' phenomena that are not observable with our ordinary senses, and so have a profound impact on the theories based on those observations. As an analogy, imagine how different our experience and conceptual frameworks would be if, like dogs, we primarily perceived the world through chemical sensing (i.e. taste and smell) rather than vision. Immunology is a clear example of how the development of scientific theories depends on the order in which measurement techniques are developed. There are two classes of immune function within the adaptive immune system: cell-mediated immunity, where T-cells eliminate infected cells, and humoral immunity, where antibodies bind to pathogens. Humoral immunity was discovered first, when it was found in 1890 that serum from an infected animal could be used to treat disease in other animals (a principle now broadly applied to produce antivenoms). The use of titers to quantify antibody presence was developed in the 1920s, and was then used to measure immune responses to inoculation and vaccination. T-cells, on the other hand, were only discovered by Jacques Miller in the 1960s, and methods of quantifying them, such as ELISA and flow cytometry, were developed later. Despite the central importance of T-cells in the immune system, their later discovery and the difficulty of quantifying them have resulted in antibodies remaining the gold standard for determining immunity to the present day, with T-cell-based immunity often ignored.[12]

Sometimes, when there is only one standardized way of measuring something, it is over-applied even in cases where empirical results do not support its use. When all you have is a hammer, everything looks like a nail. The use of imaging in orthopedics is an example. When patients present to a doctor with joint pain, they are often sent for MRI or X-ray imaging to visualize the tissues in the symptomatic area. If the imaging shows tissue damage such as osteoarthritis, labral tears, or degenerating disks, this is usually blamed for the pain, and treatment is prescribed to deal with the identified problem. However, many studies have found that these conditions are routinely present in completely asymptomatic people (and absent in some people with symptoms), suggesting that they may not in fact be the cause of pain in symptomatic patients.[13] One study in particular investigated knee biomechanics in pain-free and symptomatic individuals with the same degree of radiographic arthritis. It found that symptomatic individuals displayed markedly different patterns of muscle activation during walking, despite both groups having the same radiographic findings.[14] This suggests that the muscle activation pattern, rather than the arthritis, may be causal in the painful condition. However, muscle activation is more difficult to measure, and its measurement has not been standardized in practice the way imaging has. Nor can it be addressed by the standard tools that orthopedic surgeons employ, like arthroscopies and joint replacements. Therefore, lacking other convenient standardized measures, clinicians continue to use imaging to diagnose joint pain, despite its lack of strong empirical validation.
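One way to make this argument quantitative is the positive likelihood ratio (LR+) of an imaging finding: how much more often the finding appears in people with pain than in people without it. A value near 1 means the finding barely distinguishes the two groups, so it cannot by itself explain the pain. The sketch below is a minimal Python illustration; the counts are invented and are not taken from the cited studies, and the function name is hypothetical.

```python
# Hypothetical counts for illustration only; not data from the cited studies.

def positive_likelihood_ratio(pos_symptomatic, n_symptomatic,
                              pos_asymptomatic, n_asymptomatic):
    """LR+ = P(finding | symptomatic) / P(finding | asymptomatic)."""
    sensitivity = pos_symptomatic / n_symptomatic
    false_positive_rate = pos_asymptomatic / n_asymptomatic
    return sensitivity / false_positive_rate

# Suppose a finding is seen in 60 of 100 people with joint pain
# and in 55 of 100 people without any pain.
lr = positive_likelihood_ratio(60, 100, 55, 100)
print(f"LR+ = {lr:.2f}")
```

With these invented numbers LR+ is about 1.09, so seeing the finding on a scan hardly changes the odds that it relates to the patient's symptoms. By comparison, a finding present in 90% of symptomatic and 10% of asymptomatic people would give an LR+ of 9.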

Medicine is not the only field where the limitations of measurement influence the ontology of scientific theories. Physics, especially where it deals with microscopic, unobservable entities, as in quantum physics, is particularly vulnerable to this. Wave-particle duality is the quintessential example. Depending on the experimental setup, photons may appear to behave like waves (as in diffraction or refraction) or like particles (as in the photoelectric effect or Compton scattering). These are, however, simply the results of different measurement setups; they say nothing about the underlying mechanics of the photons that may have produced the observations. It is analogous to a cylinder casting a square shadow when lit from one angle and a circular shadow when lit from another. This is not an expression of a fundamental square-circle duality; it is a simple fact of how physical objects may appear different when examined in different ways.

In his 1969 autobiography Der Teil und das Ganze (The Part and the Whole), Heisenberg describes the philosophy that led to the acceptance of wave-particle duality in the physics community:

While in Europe the non-intuitive features of the new atomic theory, the dualism between particle and wave concepts, the merely statistical character of the laws of nature, usually led to heated discussions, sometimes to bitter rejection of the new ideas, most American physicists seemed ready to accept the new perspective without any hesitation.[15]

Heisenberg then describes the American style of thought as expressed by his tennis partner Barton, an experimental physicist from Chicago:

"You Europeans, and especially you Germans, tend to take such knowledge so terribly fundamentally. We see it much more simply. Previously, Newtonian physics was a sufficiently accurate description of the observed facts. Then electromagnetic phenomena were discovered, and it turned out that Newtonian mechanics was not adequate for them, but Maxwell's equations were sufficient for the description of these phenomena for the time being. Finally, the study of atomic processes has shown that one does not arrive at the observed results by applying classical mechanics and electrodynamics. So, it was necessary to improve the previous laws or equations, and that's how quantum mechanics was created. Basically, the physicist, even the theorist, simply behaves like an engineer who is supposed to design a bridge. Let's say he notices that the static formulas that had been used so far are not quite sufficient for his new construction. He has to make corrections for wind pressure, for aging of the material, temperature fluctuations, and the like, which he can incorporate into the existing formulas. With this, he arrives at better formulas, more reliable construction regulations, and everyone will be happy about the progress. But fundamentally, nothing has actually changed. So it seems to me also in physics. Perhaps you make the mistake of declaring the laws of nature to be absolute, and then you are surprised when they must be changed. Even the term 'natural law' seems to me a questionable glorification or sanctification of a formulation, which in essence is only a practical prescription for dealing with nature in the relevant area."[15]

This excerpt is from Chapter 8, appropriately titled Atomic Physics and Pragmatic Thinking. Indeed, the American attitude evinces pragmatic, practical thinking: it expresses skepticism about the very existence of laws of nature, likening them instead to an engineer's heuristics. This attitude reflects instrumentalism rather than realism in science. Instrumentalism, as a nonrealist philosophy, is useful in engineering but is anathema to real progress in science, since it obviates scientific theory and explanation. Karl Popper made a similar critique: that instrumentalism reduces pure science to merely applied science.[16] Indeed, instrumentalism is incompatible with the notion that the equations of quantum physics describe in any way the underlying character of the physical world. It is philosophically incoherent to suggest that equations are nothing more than practical tools for working with natural phenomena yet simultaneously describe the actual fundamental nature of reality. From the instrumentalist perspective, all that can reasonably be said is that, to make the best predictions, we may apply mathematical formalisms that treat matter either as particles or as waves, depending on the circumstances.

Similar confusions permeate the received view of quantum physics. For one, the Heisenberg Uncertainty Principle follows from position and momentum being conjugate variables related by a Fourier transform2, which is a fact about the mathematics being used, not an indicator of ontological reality. For another, the observer effect in physics arises because certain measurements necessarily interfere with the system being measured, which is a practical and operational fact, not an indicator of quantum spookiness.
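For reference, the mathematical fact being appealed to can be stated as the Fourier uncertainty inequality, given here without proof. It holds for any normalized square-integrable function and its Fourier transform (this sketch assumes the unitary convention for the transform); equality holds for Gaussians. Physics enters only through the de Broglie relation p = ħk, which rescales the spread in k into a spread in momentum:

```latex
\begin{aligned}
\phi(k) &= \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} \psi(x)\, e^{-ikx}\, dx,
  \qquad \int_{-\infty}^{\infty} |\psi(x)|^2\, dx = 1, \\
\sigma_x^2 &= \int_{-\infty}^{\infty} (x - \langle x \rangle)^2\, |\psi(x)|^2\, dx,
  \qquad \sigma_k^2 = \int_{-\infty}^{\infty} (k - \langle k \rangle)^2\, |\phi(k)|^2\, dk, \\
\sigma_x\, \sigma_k &\ge \tfrac{1}{2}, \\
p = \hbar k \;&\Longrightarrow\; \sigma_x\, \sigma_p \ge \tfrac{\hbar}{2}.
\end{aligned}
```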

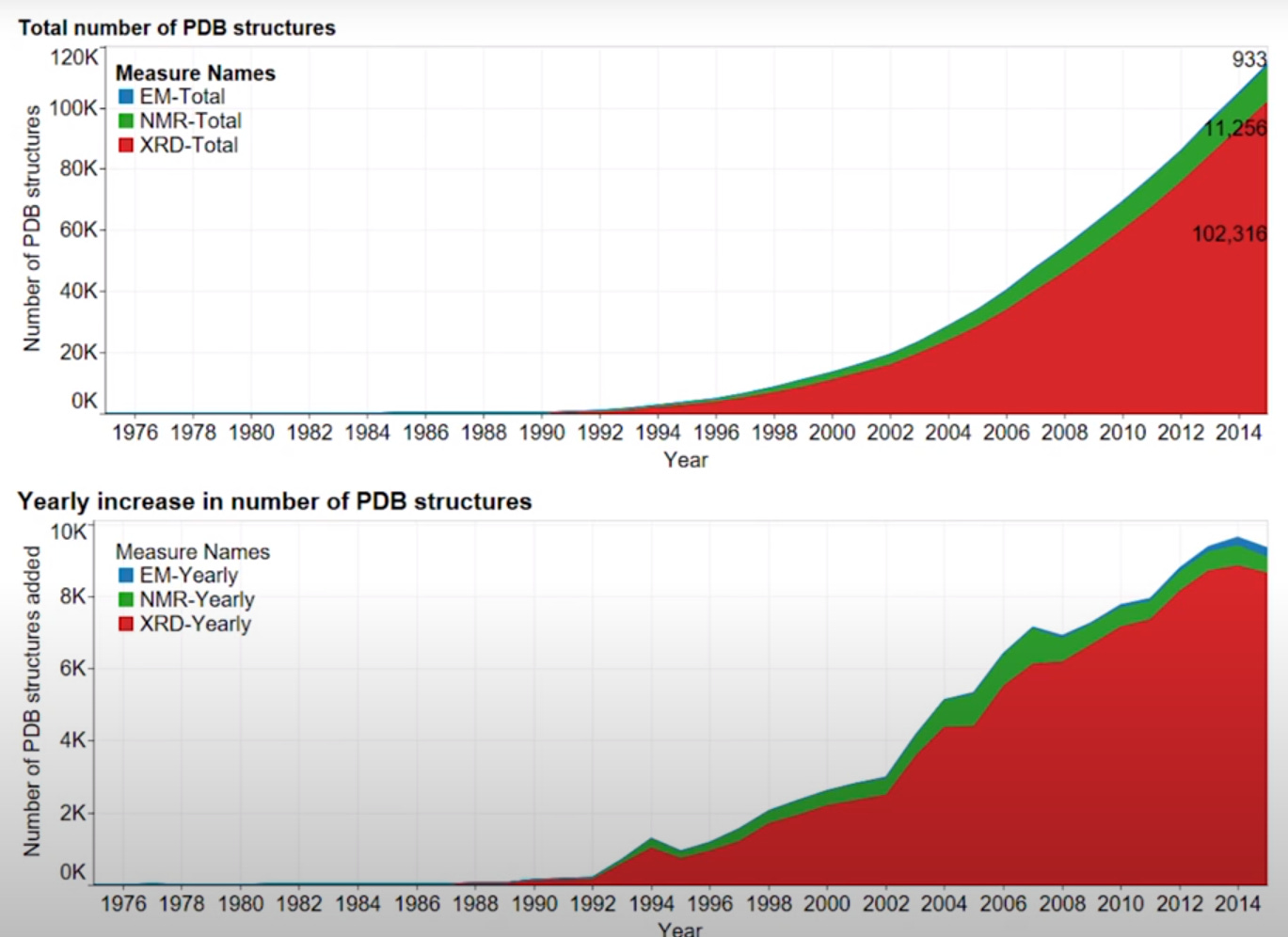

Measurement can also influence ontology when the conditions of measurement are systematically different from the conditions under which the objects of study usually exist. This can be a result of experimental design, for instance when animals are studied in captivity instead of in the wild, but it can also be a result of the method of measurement itself. For instance, there are currently three main methods for inferring protein structure. The first to be developed was X-ray crystallography, which was employed in 1912 to analyze copper sulfate and first used to characterize a protein in 1958. The problem with X-ray crystallography is that the protein must first be crystallized, the techniques used to create suitable crystals vary widely from protein to protein, and proteins do not naturally exist in a crystal state. Additionally, many proteins in biological systems are embedded in membranes, which substantially alter the structure of certain protein domains like cytoplasmic loops.[17] Thus, X-ray crystallography alone is not sufficient to accurately characterize the structure of a significant subset of proteins. Since 1985, NMR spectroscopy has also been used to infer protein structure, with the added benefit that it can identify dynamic regions of the protein; however, the technique is only viable for small proteins. In the last decade, improvements in the resolution of electron microscopy have enabled its use in determining protein structure, with the added benefit that it can image proteins embedded in membranes. However, only a small minority of proteins have been imaged using this technique, as it is new and the computational resources required are expensive.

This discordance between the conditions of measurement and the usual conditions of existence led neurobiologist Harold Hillman to assert that observations made under natural conditions must take precedence over observations made under artificial lab conditions. Hillman was strongly skeptical of staining techniques in optical and electron microscopy, and of how the stains altered the biological tissues being imaged. He considered the endoplasmic reticulum, first observed by electron microscopy in 1945, to be an artefact of cell staining, and in any case to be incompatible with the cytoplasmic streaming (the movement of intracellular components, often at varying speeds) observed in living cells.[18] While live-cell fluorescence microscopy has not supported the notion that the endoplasmic reticulum is purely an artefact, neither has it supported the view of the endoplasmic reticulum as a relatively static matrix; it is instead in constant motion within the cell.[19] The cited paper does, however, note that the fragmentation of the endoplasmic reticulum observed during mitosis is in fact an artefact of cell fixation, and that the phenomenon cannot be seen in living cells.

Perhaps the worst offenders in the use of invalid instruments are the social sciences that rely on tests of abstract psychological or social constructs. Such tests are scientific instruments, but, unlike microscopes or spectrometers, their validation requires complex theoretical and statistical argumentation. This is related to, but separate from, construct validity as previously discussed. Some key factors that must be ascertained when evaluating a psychological test are as follows:

Test-retest reliability: If a construct like intelligence or personality is supposed to be stable over time, then the results of taking the same test after an intervening period should correlate strongly.

Inter-rater reliability: For scales that can be completed by a third party, such as personality scales, different third parties should get similar results. This demonstrates that the scale is relatively unambiguous and replicable across individuals.

Measurement invariance: When the same test is applied to different groups (e.g. age, gender, or ethnic groups), it should function the same way. That is, the relationship between performance on the test and the underlying construct being measured should be the same across groups. Invariance may be violated, for example, by differential item functioning, where people from different groups with the same standing on the underlying construct systematically answer an item differently because of test bias.[20]

Internal consistency: A high internal consistency, often calculated by Cronbach's alpha3, indicates that the various items on the scale are coherently representing the same underlying construct. A scale with poor internal consistency may have spurious items that are not reflective of the construct the test purports to measure.

Factor structure: Factor analysis assesses the structure of the correlations of items on a test battery. For instance, IQ tests display a "positive manifold" where all subtest scores are positively correlated with all other scores. Consequently there is only one factor, named g, which is required to explain most of the variation in IQ test scores. In general the number of factors required to satisfactorily explain the variation in test results should be the same as the number of independent constructs the test purports to measure.
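To make two of these criteria concrete, here is a minimal Python sketch, using only the standard library, that computes Cronbach's alpha with its usual formula and a test-retest reliability as a Pearson correlation between two administrations. The function names are hypothetical and all of the data are invented for illustration; in real work an established statistics package would normally be used.

```python
from statistics import mean, variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

def pearson_r(x, y):
    """Pearson correlation, e.g. between two administrations of the same test."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

# Invented data: 5 respondents answering a 3-item scale (1-5 Likert).
responses = [[1, 2, 1], [2, 2, 3], [3, 3, 3], [4, 5, 4], [5, 4, 5]]
print(f"alpha = {cronbach_alpha(responses):.2f}")

# Invented total scores for the same 5 people, tested on two occasions.
time_1 = [10, 12, 15, 20, 22]
time_2 = [11, 13, 14, 19, 23]
print(f"test-retest r = {pearson_r(time_1, time_2):.2f}")
```

Because the three invented items rise and fall together, the variance of the total score is much larger than the sum of the item variances and alpha comes out high (about 0.95). Items that did not covary with the rest would shrink that gap and pull alpha down.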

One example of a test with questionable validity, despite widespread use in the academic literature, is the Reading the Mind in the Eyes test. The test was originally developed in 2001 as a measure of social cognitive ability for use in studying autism, and by 2023 the original paper had been cited over seven thousand times. A scoping review that year found that, of 1461 studies that employed the test, 63% neither collected nor reported any validity evidence to support its use.[21] This matters because, as the review makes clear, "validity is not a property of a test, but rather an assessment of the interpretability of a test score for a particular use in a particular sample." It is even more concerning given that the review found that 61.4% of the tests used had been modified from the original form, by adding or removing items or answer choices, adding a time limit, or translating the test into another language. Worse still, when new validity evidence was collected, it was frequently substandard: 58% of the reported Cronbach's alpha values were below the accepted minimum, and only one of the thirteen studies that assessed the test's factor structure found it to be adequately explained by a single factor.

The Reading the Mind in the Eyes test is but one example of the use of invalid instruments in psychology. It is common knowledge that the history of psychology is littered with tests that were later discarded as worthless, but, as this example shows, this is still a problem in modern psychology, and strong arguments have been made that other popular modern tests, like the Implicit Association Test, also lack validity.[22] Reviews of questionable measurement practices in the psychological literature as a whole find that they are "ubiquitous and highly problematic,"[23] and reviews of specific practices like measurement invariance testing find irreproducible and substandard results.[24] This has certainly contributed to the replication crisis in the social sciences. Tests are often constructed to investigate specific theories, and theories can also arise to explain the observed results of tests. Invalid tests vitiate this entire process. Measurement is where the rubber hits the road in the actual practice of science, so the use of improper or invalid measures means that all results deriving from those measures are baseless.

There are, then, two categories of ways in which instrumentation can lead science astray. The first and most obvious is simply the use of invalid instruments. In the same class, but less deleterious, is choosing instruments because of convenience or precedent in the literature (which may itself be due to convenience), rather than because they are truly the best instruments for the job. The second category is the erroneous interpretation of results derived from instruments. This can be due to conflating a limitation of a particular measurement with a fundamental fact about reality. It can also be due to the measurement environment being necessarily different from the natural environment, leading to qualitatively different results that cannot support inferences about naturalistic settings. These issues can be eliminated, at least in principle, by careful validation of instruments and by nuanced interpretation of results. The next essay will discuss actual measurement error, which may be reduced but never eliminated, even in principle.

Hempel, C. (1949). The Logical Analysis of Psychology. In H. Feigl and W. Sellars (Eds.), Readings in Philosophical Analysis (pp. 373–84). New York: Appleton-Century-Crofts.

Hempel, C. (1966). Philosophy of Natural Science (p. 110). Englewood Cliffs, NJ: Prentice-Hall.

MacDonald, K. B. (2002). The Boasian School Of Anthropology And The Decline Of Darwinism In The Social Sciences. In The Culture of Critique: An Evolutionary Analysis of Jewish Involvement in Twentieth-century Intellectual and Political Movements (pp. 20-49). 1stBooks.

Tooby, J. (n.d.). What scientific idea is ready for retirement? Retrieved July 22, 2023, from https://www.edge.org/response-detail/25343

Block, N. (2014). Consciousness, Big Science and Conceptual Clarity. In G. Marcus & J. Freeman (Eds.), The Future of the Brain: Essays by the World's Leading Neuroscientists (pp. 161–176). Princeton University Press. https://philarchive.org/rec/BLOCBS

K. K. (2019, October). Structures in more than 150 papers may be wrong thanks to NMR coding glitch. Chemistry World. Retrieved February 22, 2024, from https://www.chemistryworld.com/news/structures-in-more-than-150-papers-may-be-wrong-thanks-to-nmr-coding-glitch/4010413.article

Zeeberg, B. R., Riss, J., Kane, D. W., Bussey, K. J., Uchio, E., Linehan, W. M., Barrett, J. C., & Weinstein, J. N. (2004). Mistaken Identifiers: Gene name errors can be introduced inadvertently when using Excel in bioinformatics. BMC Bioinformatics, 5(1), 80. https://doi.org/10.1186/1471-2105-5-80

Lewis, D. (2021). Autocorrect errors in Excel still creating genomics headache. Nature. https://doi.org/10.1038/d41586-021-02211-4

Moses, L., Einarsson, P. H., Jackson, K., Luebbert, L., Booeshaghi, A. S., Antonsson, S., Bray, N., Melsted, P., & Pachter, L. (2023). Voyager: Exploratory single-cell genomics data analysis with geospatial statistics (p. 2023.07.20.549945). bioRxiv. https://doi.org/10.1101/2023.07.20.549945

Pachter, L. (2023). A 🧵 on why Seurat and Scanpy's log fold change calculations are discordant. Twitter/Thread Reader. https://threadreaderapp.com/thread/1694387749967847874.html

Illumina (ILMN) Leads the Market With 80% Share. (2024, January 30). Yahoo Finance. https://finance.yahoo.com/news/illumina-ilmn-leads-market-80-123127888.html

Schwarz, M., Mzoughi, S., Lozano-Ojalvo, D., Tan, A. T., Bertoletti, A., & Guccione, E. (2022). T cell immunity is key to the pandemic endgame: How to measure and monitor it. Current Research in Immunology, 3, 215–221. https://doi.org/10.1016/j.crimmu.2022.08.004

Lee, A. J. J., Armour, P., Thind, D., Coates, M. H., & Kang, A. C. L. (2015). The prevalence of acetabular labral tears and associated pathology in a young asymptomatic population. The Bone & Joint Journal, 97-B(5), 623–627. https://doi.org/10.1302/0301-620X.97B5.35166

Astephen Wilson, J. L., Stanish, W. D., & Hubley-Kozey, C. L. (2017). Asymptomatic and symptomatic individuals with the same radiographic evidence of knee osteoarthritis walk with different knee moments and muscle activity. Journal of Orthopaedic Research: Official Publication of the Orthopaedic Research Society, 35(8), 1661–1670. https://doi.org/10.1002/jor.23465

Heisenberg, W. (1969). Atomphysik und pragmatische Denkweise. In Der Teil und das Ganze: Gespräche im Umkreis der Atomphysik (pp. 49-53). R. Piper. (Translated by ChatGPT).

Popper, K. R. (2002 [1963]). Conjectures and Refutations: The Growth of Scientific Knowledge (p. 149). Psychology Press.

University of Arkansas. (2019, October 8). Limitations of method for determining protein structure. ScienceDaily. https://www.sciencedaily.com/releases/2019/10/191008115923.htm

Hillman, H., & Sartory, P. (1980). The Living Cell: A Re-examination of Its Fine Structure. Packard Pub.

Costantini, L., & Snapp, E. (2013). Probing Endoplasmic Reticulum Dynamics using Fluorescence Imaging and Photobleaching Techniques. Current Protocols in Cell Biology, 60, 21.7.1–21.7.29. https://doi.org/10.1002/0471143030.cb2107s60

Kirkegaard, E. O. W. (2022, April 15). Brief introduction to test bias in mental testing.

Higgins, W. C., Kaplan, D. M., Deschrijver, E., & Ross, R. M. (2023). Construct validity evidence reporting practices for the Reading the mind in the eyes test: A systematic scoping review. Clinical Psychology Review, 102378. https://doi.org/10.1016/j.cpr.2023.102378

Schimmack, U. (2021). The Implicit Association Test: A Method in Search of a Construct. Perspectives on Psychological Science, 16(2), 396–414. https://doi.org/10.1177/1745691619863798

Flake, J. K., & Fried, E. I. (2020). Measurement Schmeasurement: Questionable Measurement Practices and How to Avoid Them. Advances in Methods and Practices in Psychological Science, 3(4), 456–465. https://doi.org/10.1177/2515245920952393

Maassen, E., D’Urso, E. D., Assen, M. A. L. M. van, Nuijten, M. B., Roover, K. D., & Wicherts, J. (2022). The Dire Disregard of Measurement Invariance Testing in Psychological Science. OSF. https://doi.org/10.31234/osf.io/n3f5u

It should be noted that even if the errors caused by such bugs are small in magnitude, they can sometimes have a large effect on the fidelity of derived results in that domain. For instance, changing physical constants by 2% can have cascading consequences that cause derived quantities to diverge widely from reality. Thus, it cannot be dismissed as trivial when flawed programs produce incorrect results in science.

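Roughly speaking, Cronbach's alpha compares the sum of the variances of the individual items in the scale with the variance of the total scale score: alpha = k/(k - 1) × (1 - sum of item variances / variance of total score), where k is the number of items. The scale is more reliable when the variance of the total scale score dominates, which happens when the items covary strongly.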

Errata: The paragraph on determining protein structure with X-ray crystallography has been updated to fix some errors in the description of the technique.